Facebook under pressure over kids and teens; Congress grills YouTube, TikTok and Snap; FTC prioritizes children and teens, issues 700 penalty notices; Age verification matters

Facebook under pressure over kids and teens

Last month, former Facebook employee Frances Haugen testified before the U.S. Senate, leading lawmakers of both parties to call for tougher laws to curb technology platforms. In her opening statement, Haugen said “I believe that Facebook’s products harm children,” and claimed that the documents she disclosed show that Facebook prioritized profits over safety, especially for vulnerable groups like teenage girls.

As more emerges from the leaked Facebook documents, some academic researchers suggest that Facebook’s own data is less conclusive than it may seem, and that the link to the reported harms may be weaker than mainstream reporting has suggested.

One thing seems certain: lawmakers from both parties are coming together over a shared concern about Facebook’s approach to children and teens. Even Facebook Vice President Nick Clegg acknowledged that, when it comes to improving his company’s platform, there are “certain things only lawmakers can do.”

Likely as a result of all this, UK campaigners are also demanding stronger online safety protections for young people. Their calls follow a series of changes to Facebook’s services, including new controls for young users of its platforms, such as prompting them to take a break from Instagram and “nudging” teens who repeatedly view the same content when it may not be conducive to their well-being.

With Facebook’s recent high-profile embrace of the metaverse, including the company’s rebranding as Meta, the question remains how it will improve its approach to young audiences. If social media is unsafe for kids, what happens in VR? If its current VR offering, Oculus, is any indication, Meta has work to do: the product reportedly lacks the content and parental controls needed for the many kids who use it, despite terms of service that limit use to those aged 13 and older.

Congress grills YouTube, TikTok and Snap

After the Facebook fiasco, YouTube, TikTok and Snap were called to testify before Congress about kids’ safety (a first for TikTok and Snap). Sen. Richard Blumenthal (D-CT) said, “Big Tech is having its ‘big tobacco’ moment–a moment of reckoning.” The social media companies were grilled about their efforts to protect children and safeguard teen privacy. Unsurprisingly, the companies sought to distance themselves from Facebook and described how they work to create safe online experiences for kids. The hearings became particularly heated when Sen. Marsha Blackburn (R-TN) raised concerns about TikTok’s data sharing with the Chinese government.

After the hearings, Sens. Blumenthal and Ed Markey (D-MA) renewed their call to pass an update to COPPA, which, among other things, would extend its protections so that personal data from children up to age 15 (rather than 12) cannot be collected without consent. The senators also urged passage of legislation that would ban auto-play settings and push alerts designed to encourage tech usage. Their efforts are even attracting Republican support for legislation to rein in Big Tech. We will continue to keep a close eye on developments.

FTC prioritizes children and teens, COPPA review continues

While Congress may finally be poised for action, the FTC has already committed to take on teen privacy. In September, the FTC announced the adoption of streamlined investigation and enforcement procedures (under so-called omnibus resolutions) in certain areas including privacy practices related to children under 18. Omnibus resolutions allow FTC staff to issue civil investigative demands compelling the production of documents with the sign-off of only a single Commissioner.

In another effort to tackle an ambitious agenda, the FTC issued a Notice of Penalty Offenses to over 700 companies, warning that it intends to pursue civil penalties if they engage in conduct the FTC has deemed unfair or deceptive, including fake reviews and misleading endorsements. The FTC typically cannot seek civil penalties for a first violation of the FTC Act. That authority is triggered only when the Commission has previously determined, in a written decision, that a practice is unfair or deceptive, and a company then engages in that practice knowing it has been found unlawful. According to the FTC’s blog, receiving a Notice does not indicate a violation; in fact, most recipients’ practices likely have not been reviewed. Instead, by sending the Notice, the Commission aims to establish that requisite knowledge, laying the groundwork for civil penalties. If the Commission is forced to litigate these issues, some lawyers question whether its use of the Penalty Offense Authority will succeed.

The FTC has made clear that it is willing and ready to protect the internet’s younger users, a point that hasn’t gone unnoticed by lawmakers. In fact, Sen. Markey and Reps. Kathy Castor (D-FL) and Lori Trahan (D-MA) recently sought to capitalize on this by urging the FTC to use its authority to hold tech companies to their own updated policies.

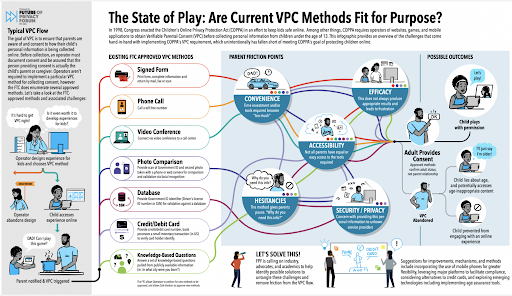

Meanwhile, as the FTC’s review of the COPPA Rule continues, the privacy nonprofit Future of Privacy Forum has published a detailed discussion document charting the history and current state of kids’ data privacy laws and analyzing how Verifiable Parental Consent is working in practice. It aims to inform lawmakers and regulators as they consider changes to COPPA and other new privacy regulations, and is open for comments until 15 December 2021.

Age verification matters

As a result of pressure from regulators, parents, and activists, businesses and governments worldwide are implementing stricter digital age checks. The vast majority of online age checks simply ask site visitors to enter a date of birth, with no further evidence required. However, that is changing as new regulations, such as the Age Appropriate Design Code in the U.K. and the updated Audiovisual Media Services Directive in the EU, place more responsibility on operators to verify self-declared ages.

A number of the new age verification methods being rolled out require users to submit additional personal information, such as government-issued ID or credit card details. Critics of such intrusive age checks, including the Electronic Frontier Foundation, worry that they will create new databases of exploitable (and hackable) user data and may chill the free expression that online anonymity makes possible.

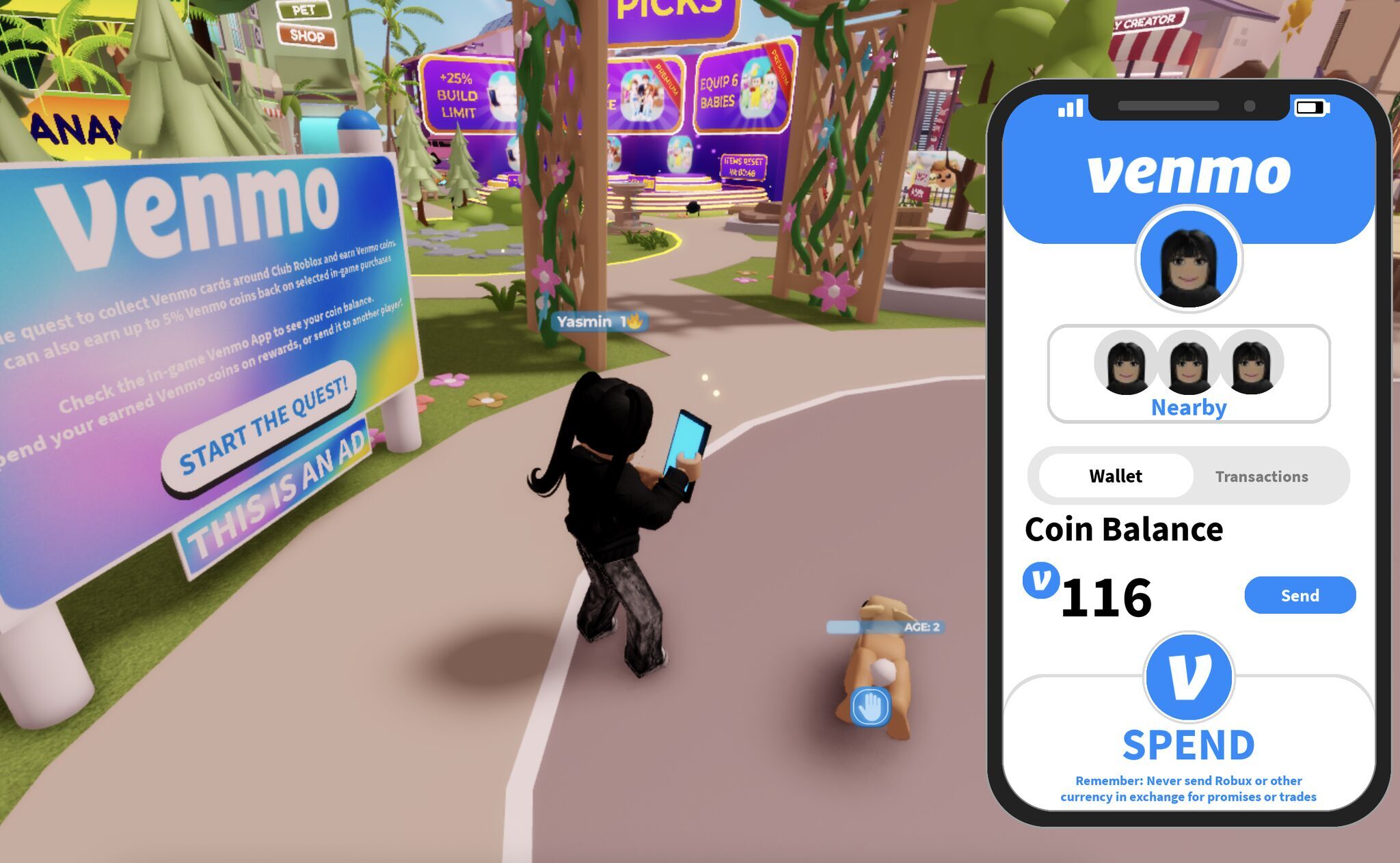

One example of a new implementation is Roblox, which is gradually rolling out age verification for users who want to access its new voice chat feature. As Roblox continues to broaden its appeal beyond kids, the company is working to maintain a safe environment for its youngest users.

Most laws, including the GDPR, require a proportionate approach to age verification: the greater the potential risk to children, the more stringent the verification method should be. Kids face a range of potential harms online, many of them context-dependent, which makes it difficult to apply a single standard for age verification. For example, the risk of harm in communication channels may vary widely between types of communities and platforms, e.g., social media versus online gaming versus streaming platforms.

Age verification is here to stay. In fact, the number of kids protected by these laws continues to grow as regulations evolve. A recent proposal in Australia would require parental consent for children under 16. Although the bill is aimed at social media platforms, its proposed scope also includes “multiplayer online games with chat functionalities.” Similar proposals are being made around the world, including the COPPA update mentioned earlier.

SuperAwesome and its parent company, Epic Games, recently announced that SuperAwesome’s parent verification services are now free for all game developers, in an effort to make it easier to build safe online experiences for kids.

What else mattered:

- Leading apps, including popular social media and gaming platforms, have been accused of violating the UK’s new Children’s Code. UK charity 5Rights Foundation submitted a complaint to the Information Commissioner’s Office (ICO) alleging that there are still widespread violations of the Code, including design tricks, nudges, data-driven features that serve harmful material, and insufficient assurance of a child’s age before granting access to inappropriate features such as video chat.

- 5Rights Foundation’s Digital Futures Commission, a research project led by Sonia Livingstone of the London School of Economics, published “Playful by Design,” a new report and presentation analyzing how children play in the digital world and calling on developers to enable more creativity while continuing to invest in child safety. The report examines kids’ engagement with a number of leading online platforms, including social media and games.

- UK digital identity firm Yoti announced that its Facial Age Estimation technology can now be used reliably with children under 13. According to the company, the technology uses AI-based facial analysis that preserves users’ privacy. While this could be a breakthrough for the industry, the ICO recently said that age estimation technology as a whole is not reliable enough for commercial use, a point Yoti is pushing back on.

- The UK continues to lead the way in digital safety regulation and guidance. UK communications regulator Ofcom rolled out further guidance for video-sharing platform providers on measures to protect their users from harmful or restricted material. The Incorporated Society of British Advertisers (ISBA), the UK’s leading trade body for advertisers, also launched a new Code of Conduct for Influencer Marketing, which sets out best practice for advertisers, brands, and talent agencies, as well as influencers. The new ISBA Code provides a clear mandate to ensure users see the ‘#ad’ disclosure before engaging with commercial content.

- While Google CEO Sundar Pichai is calling for federal privacy legislation to reduce the cost of complying with a patchwork of state laws, the search giant is being accused by several state attorneys general of seeking other tech giants’ help in stalling kids’ privacy protections.

- In other Google news, YouTube announced an algorithm update that will automatically mark “Made for Kids” (MFK) content as either high or low quality. YouTube followed this up with a warning that it will start to demonetize low-quality MFK content.

- UNICEF released “Promoting diversity and inclusion in advertising: a UNICEF playbook.” The playbook characterizes different types of stereotyping that can be harmful to children and provides tools to help businesses create guidelines and strategies for embedding diversity and inclusion in their content and products for kids.

Stay safe and be well.

Warm regards,

Katie Goldstein

Global Head of KidAware